Composing for video games is an often overlooked and underappreciated craft, yet a good score can transform a mediocre game into game of the year. If you’re interested in how to compose for any genre of game, these are some must-know tips to get you started.

1. Work With The Sound Designer

“If you’re not doing the sound design, find out who is and buy them a drink.”

When writing music for a game, just like film, you’re going to be battling with the sound design in game. Many composers on indie game budgets are multitasking, creating and even implementing the sound design as well as the music.

If you’re only onboard as a composer though, then a good relationship with the sound designer will help massively.

This is how you want your sound designers to look all the time! [Ari Pulkkinen – Angry Birds]

As film composers learn very quickly, if anything in their stems clashes with the sound design or, more importantly, the dialogue, their music will simply get turned down for the benefit of the film overall.

Try your damnedest to hear/see/play any footage with the sound design in game ASAP. You’ll connect with the game far better and it will no doubt inspire you when writing for that project.

2. Ear Fatigue Is Real

We’ve all had this. You start a game and this catchy piece of music begins to get into your head and you’re tapping your foot, perhaps the way a dad would when hearing his favourite hit at the wedding you all went to last summer.

The problem is, this piece of music is only 30 seconds long, it has repeated 14 times already and you’re still getting used to the controls. You’ve now vowed to never play the game again and have gone slightly mad in the process.

If you’ve not read Winifred Phillips’ engaging book “A Composer’s Guide to Game Music” then I suggest you do so immediately. There’s a wonderful piece of advice where she states that one way to avoid ear fatigue is for a single piece of music to be at least 5 minutes long before looping around again.

Keep in mind how the players can explore the levels when writing the music for them

Game levels with a structured time limit won’t usually come under this, but let’s take an open world game where the player is allowed the freedom to wander off wherever they wish. The player might explore a certain area for, say, 10 minutes.
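To put some rough numbers on that 10-minute wander, here is a minimal Python sketch of how many times a looping cue comes round while the player explores. The function name `loop_passes` and the track lengths are purely my own illustration, not something taken from a game engine or from the book.

```python
def loop_passes(explore_minutes, track_minutes):
    """Return how many times a looping track plays during one exploration spell."""
    if track_minutes <= 0:
        raise ValueError("track length must be positive")
    return explore_minutes / track_minutes


# Hypothetical track lengths: a 30 second loop, a 3 minute cue, a 5 minute cue.
for track_minutes in (0.5, 3, 5):
    passes = loop_passes(10, track_minutes)
    print(f"{track_minutes} minute track: {passes:.1f} passes in 10 minutes")
```

The 30 second loop comes round 20 times, while the 5 minute cue only plays twice, which is the whole argument for writing longer pieces.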

Now, even a piece of music lasting 3 minutes would have gone round 3 times in that period and “could” start to annoy the player. So a longer piece is a simple way of avoiding ear fatigue for the player. Luckily though, there’s an even cleverer way to combat this problem…

3. Adaptive Music

Since the early days of adaptive engines like iMUSE, built by LucasArts’ Michael Land & Peter McConnell for Monkey Island 2, many great video game composers have used adaptive music to great effect.

Creating multiple dynamic layers in the music, so that it changes depending on what the player does in game, is a tremendous way to immerse the player.

Here’s a very simple example of what could be done. I must stress, things can get even more complicated in a fantastic way but hopefully this gives you some idea.

Just like any piece of music we write in our DAW, we can solo or group sections of our mix. We can use this method to create layers and more importantly think about how we write for a game using adaptive music.

The mix could be broken out and stemmed like this:

Layer 1 Low intensity: Drums, Percussion

Layer 2 Medium intensity: Brass, Woodwind, Strings that play chords or supporting melody

Layer 3 High intensity: Main melody on Strings/Brass with Choir shouting some random shizzle

The first “low intensity” layer would be played as a warning to the player that danger is near.

When, say, the humongous robot that wants to smash your brains open charges at you, the second “Medium Intensity” layer kicks in and your battle with the Tin Man commences.

The first two layers are now playing together as one and the intensity has risen from Low to Medium. When the whole army of Robots turns up and wants to party like it’s 1999, the 3rd layer arrives and plays in sync with layers 1 & 2.
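If it helps to see that logic written down, here is a minimal Python sketch of the three-layer idea. It is only an illustration under my own assumptions: the game-state names are made up, and it assumes every layer is always running from the same start point, so that switching a layer's gain on keeps it in sync with the others.

```python
# Layer names follow the example above; the game-state names are hypothetical.
LAYERS = ["low", "medium", "high"]
LAYERS_NEEDED = {
    "exploring": 0,      # no danger yet, no combat music
    "danger_near": 1,    # warning: drums and percussion only
    "robot_attacks": 2,  # the Tin Man charges: layers 1 and 2
    "robot_army": 3,     # everything at once
}


def layer_gains(game_state):
    """Return a gain (0.0 muted, 1.0 audible) for every layer.

    All layers are assumed to be playing the whole time, so only the
    gain changes and the stems never drift out of sync.
    """
    needed = LAYERS_NEEDED[game_state]
    return {name: (1.0 if index < needed else 0.0)
            for index, name in enumerate(LAYERS)}


for state in LAYERS_NEEDED:
    print(state, layer_gains(state))
```

In a real game you would fade the gains rather than flip them instantly, but the principle of stacking layers as the threat grows is the same.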

Red Dead Redemption has been praised for having one of the most adaptive soundtracks.

Hey presto, you’ve got your full track playing, but it’s having an even bigger impact than it would if you’d just had it all kick in from the start. But hang on…this is all well and good, but how do you implement it?

Well, the best way, arguably, and it’s only going to get more prominent my friends, is…

4. Middleware

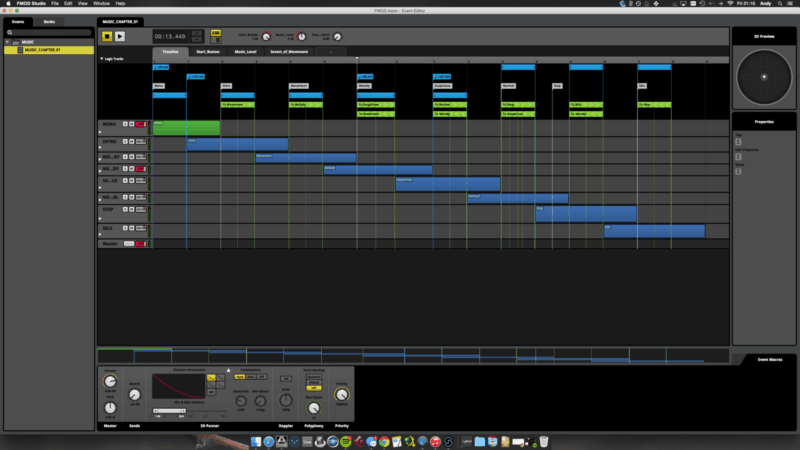

FMOD & Wwise are two names you’ll constantly see cropping up when you’re trawling the net to find out more about composing for games.

I expect many of you have even downloaded them, tried them for an hour and then scratched your head. They’re powerful programs and I’ll admit to chatting to many composers working in the video games industry who have yet to use them.

What’s clear though, is that it can only benefit you to have some knowledge of how these work. They are the link between your DAW and the game engine the developers are using (Unity, Unreal, etc.).

Essentially, middleware puts the power back into the hands of the composer/sound designer rather than the coder, and you can choose how your audio is triggered and how it reacts within the game.

Both programs have their pros and cons but both can do the same job – try them out and see which one you prefer!

The adaptive example I used above is only a taster of what can be done. Jason Graves (Dead Space, Tomb Raider 2013, Far Cry Primal) uses real-time FX such as distortion and delay to great effect, blending them in and out of his audio in Wwise.

Multiple layers of audio drop in and out at any given point and it really does show how much more complex composing for games can be compared to working in film.
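To give a feel for what “blending an effect in and out” means in practice, here is a small Python sketch that maps a single game value to dry and effected (wet) gains. This is not FMOD or Wwise code and not how any particular composer sets things up; the function name `fx_blend` and the 0 to 1 intensity value are my own assumptions, and in middleware you would normally draw this sort of curve in the tool rather than type it.

```python
import math


def fx_blend(intensity):
    """Map a 0..1 game value to (dry_gain, wet_gain) on an equal-power curve.

    intensity is a hypothetical value sent by the game, for example how
    close the danger is. Values outside 0..1 are clamped.
    """
    x = min(max(intensity, 0.0), 1.0)
    dry_gain = math.cos(x * math.pi / 2)  # clean signal fades out
    wet_gain = math.sin(x * math.pi / 2)  # distorted/delayed signal fades in
    return dry_gain, wet_gain


for intensity in (0.0, 0.5, 1.0):
    dry_gain, wet_gain = fx_blend(intensity)
    print(f"intensity {intensity}: dry {dry_gain:.2f}, wet {wet_gain:.2f}")
```

The equal-power curve is a common choice for crossfades because the combined loudness stays roughly steady while the character of the sound changes.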

It’s a grey area for upcoming composers trying to get into the games industry. The higher the budget, the more likely it is that someone else will be implementing the audio, while the composer focuses on writing the music and delivering stems. Indie projects on smaller budgets often require more of you.

Whether you are using the tools or not, it will greatly benefit your career if you have an understanding of what will be done with your stems and how they might be manipulated. It will also improve your writing skills for games, and in general. As a freelance composer, every project you’re involved with will be different.

Both FMOD & Wwise have demos you can download and Thinkspace have just released an FMOD in a weekend course. The Sound Architect is also a great resource to keep up with all things Game Audio.

5. Referencing

Most of us are not blessed with acoustically treated rooms or even a set of monitors for that matter. Some happily mix on headphones. Alan Meyerson (Hans Zimmer, Uncharted 4, Metal Gear Solid) does exactly this for video games as he knows that most players either listen on headphones or laptop speakers.

The most important thing you can do to improve your ear and mixing skills is to listen to something that you know sounds great. Sounds so obvious.

Mixers/Engineers in the past would create a reference CD with their favourite mixes that they know inside out. They could play that CD in any studio in the world and it would help them understand the acoustics of that mixing room, thus massively helping them when it came to mixing the project they were recording on.

In Logic, I still bounce and automatically add my mix to iTunes so I can listen against the big hitters, while making sure I have the “Sound Check” option ticked in iTunes, so they will play at more or less the same level.

In the past year or so reference plugins have started to filter through into the marketplace.

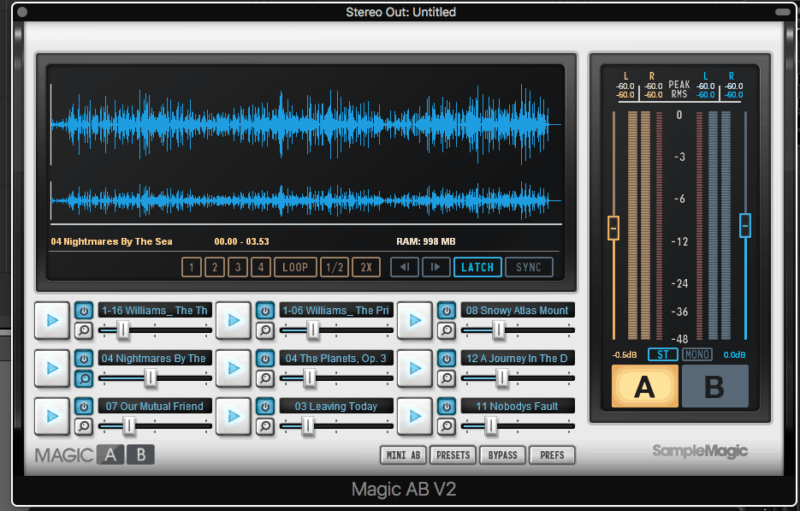

By far my favourite plugin I’ve ever bought is Magic A/B, which sits on the mix bus.

You can load up to 9 songs in the GUI and click between your mix and any of the references. It’s an invaluable tool and although you have to make sure you level match with their volume controls, you can listen, compare and learn so much about your balance, EQ, reverb and compression.
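Level matching is easier to trust once you’ve seen the arithmetic. The Python sketch below estimates how many dB to trim a reference so it sits at the same level as your mix. It is a simplification under my own assumptions: it uses plain RMS rather than a proper loudness measurement, the helper names are hypothetical, and it says nothing about how Magic A/B itself works.

```python
import math


def rms_dbfs(samples):
    """RMS level of samples (floats between -1 and 1) in dB relative to full scale."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        raise ValueError("cannot measure the level of pure silence")
    return 10 * math.log10(mean_square)  # same as 20 * log10 of the RMS value


def match_gain_db(mix, reference):
    """Gain in dB to apply to the reference so its RMS level matches the mix."""
    return rms_dbfs(mix) - rms_dbfs(reference)


# Stand-in audio: the "reference" is the same sine wave at twice the amplitude.
mix = [0.25 * math.sin(2 * math.pi * i / 100) for i in range(1000)]
reference = [0.5 * math.sin(2 * math.pi * i / 100) for i in range(1000)]
print(f"Trim the reference by {match_gain_db(mix, reference):.1f} dB")
```

Here the reference needs pulling down by about 6 dB. Without that trim the louder track tends to sound “better” simply because it is louder, which is exactly the trap level matching avoids.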

Magic A/B is a great piece of software for comparing up to 9 different sources

I tend to mix/compare references in mono, so having the F1 button assigned as the mono button on my iD22 massively helps my workflow.

Another little piece of advice is to start reading up about LUFS metering, which is becoming more of an industry standard when it comes to levels in gaming and music recordings in general. Mastering engineer Ian Shepherd explains the subject quite nicely.

6. Deadlines

This is a simple one. Creating deadlines for yourself before you even get the paid work is a must. There are a ridiculous number of talented musicians out there creating ideas and not finishing them.

Trust me, you are better off having 20 badly finished pieces of music, freeing up your brain to create something new, rather than sitting on the best idea you’ve ever had for a year which you can’t finish and move on from.

Although video games tend to have a much longer period to write and finish music compared to film and TV, it’s worth getting into this mindset now. You don’t want to get a reputation for being unreliable. In the words of Mortal Kombat: